Turn users’ first experience into a lasting habit with a clearer, stronger adoption strategy.

Product adoption sits at the intersection of product intent and real-world use.

Whether you work in SaaS or are moving into the “bright new dawn” of modernization, the contexts differ but the underlying question is the same: are people using what you build?

People, it has to be said, don’t adopt products because features exist. They adopt when software helps them complete meaningful work with less effort, fewer mistakes, and growing confidence.

This guide focuses on shared principles that apply whether your product generates revenue directly or supports a larger operational goal.

You should leave this guide knowing what to prioritize, how to diagnose adoption breakdowns, and how to build momentum without adding friction for your teams or your users.

Product adoption is the ability for users to consistently reach value with your software over time.

Whether your product generates revenue directly or supports a larger operational goal, adoption answers the same question:

Are people able to do what they came here to do, without unnecessary help?

Adoption is not a moment tied to launch or onboarding. It is an ongoing system that either compounds value or compounds friction.

Most teams believe they have an adoption problem. In reality, it’s a prioritization problem.

If everything feels important, nothing gets adopted.

Before looking at tactics, it helps to be explicit about a few uncomfortable realities.

Low adoption is rarely the root issue. It’s the outcome of unclear value, competing priorities, or workflows that demand too much effort for the payoff.

When people struggle, stall, or revert to old behavior, it is usually because the system asks more of them than it gives back.

Documentation, walkthroughs, and live training can help motivated users, but they do not fix fragile systems. They increase effort instead of reducing it.

Adoption improves when the product carries more of the cognitive and procedural load.

Most adoption problems look different on the surface but share common causes underneath:

- Users are asked to invest effort before they experience a meaningful outcome.
- Experiences are built for highly motivated or highly technical users, leaving others behind.
- Features, workflows, or rules arrive faster than users can absorb them.
- Product, growth, operations, and CX each influence adoption, but no one owns success end to end.
- Every improvement requires engineering time, approvals, or long release cycles.

When adoption stalls, teams often respond with more documentation or training. This increases effort instead of reducing it.

Regardless of industry or product model, successful adoption follows a predictable progression:

1. Users understand where they are and what matters first.
2. Users complete a meaningful action that proves the product is useful.
3. Users believe they can repeat that success on their own.
4. Users either deepen usage or complete tasks faster and with fewer errors.
5. The product becomes part of how work gets done, without constant assistance.

Adoption improves when each stage is supported intentionally instead of compressed into a single experience.

The most effective adoption programs rely on a small set of plays used with discipline.

Adoption breaks down when guidance is technically correct but contextually wrong.

Users don’t ignore help because they’re resistant, but because it isn’t relevant to what they’re trying to do in that moment. Effective adoption depends on showing the right guidance to the right users at the right time.

That requires basic targeting: simple signals of intent, role, or progress.
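To make “basic targeting” concrete, here is a minimal Python sketch that picks which in-app guide, if any, to show a user based on role, current intent, and completed steps. The `User` and `Guide` types, their fields, and the guide names are hypothetical illustrations, not any product’s API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class User:
    # Hypothetical profile: the user's role, what they are trying to do right now,
    # and which product steps they have already completed.
    role: str
    intent: Optional[str] = None
    completed_steps: set[str] = field(default_factory=set)


@dataclass
class Guide:
    name: str
    roles: set[str] = field(default_factory=set)  # empty set means "any role"
    intent: Optional[str] = None                  # show only while the user pursues this intent
    requires_step: Optional[str] = None           # show only after this step is done
    hide_after_step: Optional[str] = None         # stop showing once this step is done


def is_eligible(guide: Guide, user: User) -> bool:
    """True only if every targeting condition on the guide matches the user."""
    if guide.roles and user.role not in guide.roles:
        return False
    if guide.intent is not None and guide.intent != user.intent:
        return False
    if guide.requires_step is not None and guide.requires_step not in user.completed_steps:
        return False
    if guide.hide_after_step is not None and guide.hide_after_step in user.completed_steps:
        return False
    return True


def pick_guide(guides: list[Guide], user: User) -> Optional[Guide]:
    """Return the first eligible guide in priority order, or None if nothing is relevant."""
    return next((g for g in guides if is_eligible(g, user)), None)


# Example guides, listed in priority order (names are illustrative).
GUIDES = [
    Guide("invite-teammates", roles={"admin"},
          requires_step="create-project", hide_after_step="invite-sent"),
    Guide("create-first-project", hide_after_step="create-project"),
]

new_admin = User(role="admin")
print(pick_guide(GUIDES, new_admin).name)  # create-first-project
```

Listing guides in priority order keeps the rule simple: the first relevant guide wins, and a user who matches nothing sees nothing, which is often better than irrelevant help.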

Adoption plays are not UI components. They are behavioral experiences. Each one exists to remove a specific kind of friction that prevents people from succeeding with software.

If you know what friction you’re dealing with, you know which play to use.

Friction they remove: “I don’t know where to start”

Orientation plays are for the moment when a user first arrives and is deciding, consciously or not, whether this product is worth their attention.

This only works when teams are clear on the first value moment they’re trying to guide users toward.

Their only job is to help the user choose a first meaningful action without second-guessing.

If orientation lasts longer than a few moments, it has failed.

Metric check:

If time to first meaningful action is high or many users never take a core action, orientation is doing too much or not enough.

Friction they remove: “I don’t know if I’m making progress”

Progression plays exist when value cannot be delivered in a single step. They help users keep moving forward instead of abandoning the process halfway through.

Their role is to turn a complex outcome into a series of achievable actions.

Progression works when it reduces uncertainty, not when it adds structure for its own sake.

Metric check:

If users start workflows but do not finish them, or drop-off clusters around the same step, progression needs tightening.

Friction they remove: “I’m stuck right now”

Contextual guidance is the most powerful adoption play because it helps users while the work is happening.

Its job is to reduce cognitive load at the exact moment a decision or action is required.

This is where teams eliminate the Training Tax, the ongoing cost of a product that only works after someone explains it. The product absorbs complexity instead of outsourcing it to people.

Metric check:

If error rates, retries, or support tickets spike around the same actions, contextual guidance is missing or poorly timed.

Friction they remove: “This doesn’t feel like it’s for me”

Personalization exists to prevent relevance decay as products serve more users, roles, or use cases.

Its job is not to make the experience feel clever. Its job is to remove unnecessary steps and decisions.

Personalization works because relevance is what sustains adoption. When guidance reflects a user’s intent or context, they move faster and are less likely to disengage. When it doesn’t, even good guidance gets ignored.

Personalization should simplify the experience. If it increases complexity, it is working against adoption.

Metric check:

If users skip guidance entirely or time to value varies widely between segments, personalization is too shallow or misaligned.

Friction they remove: “I’m not sure I did this right”

Reinforcement plays exist to stabilize confidence. They help users understand that their actions had the intended effect.

This is especially important when work has consequences, delays, or dependencies.

Confidence comes from clarity. Celebration is optional.

Metric check:

If users redo completed work or seek confirmation after finishing, reinforcement is unclear.

Adoption metrics are only useful if they tell you where users are struggling and what kind of help they need.

The mistake most teams make is tracking metrics in isolation. Completion rates, activation, or engagement look fine on dashboards but do not explain what to fix.

Strong teams treat metrics as diagnostic signals. Each one points to a specific kind of friction and maps directly to an adoption pattern.

What it tells you: Whether users understand where to start.

This is the earliest and most sensitive adoption signal.

If users take a long time to do anything meaningful, orientation has failed.

What it tells you: Whether users reach real value

Activation should represent a moment where the product proves itself.

Quick tip: High onboarding completion with low activation usually means users are finishing the steps you’ve defined, but those steps aren’t actually leading to a meaningful value moment.

Activation Rate Equation:

Activation Rate = (Number of users who reach activation ÷ Total new users) × 100

Average activation rate: 32%
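A minimal sketch of the activation-rate formula in Python, assuming you already have the set of user IDs in a signup cohort and the set of users who triggered whatever event you define as activation. The function and variable names are hypothetical.

```python
def activation_rate(new_user_ids: set[str], activated_user_ids: set[str]) -> float:
    """Activation Rate = (users who reach activation / total new users) * 100.

    `new_user_ids` is the signup cohort being measured; `activated_user_ids` is everyone
    who has fired the activation event you chose, possibly including older users.
    """
    if not new_user_ids:
        raise ValueError("the signup cohort is empty")
    # Count only activations from this cohort, so earlier signups don't inflate the rate.
    activated_in_cohort = new_user_ids & activated_user_ids
    return 100 * len(activated_in_cohort) / len(new_user_ids)


cohort = {"u1", "u2", "u3", "u4"}
activated = {"u2", "u4", "u_old"}  # "u_old" signed up before this cohort
print(activation_rate(cohort, activated))  # 50.0
```

Intersecting the two sets is the design choice that matters here: without it, activations from earlier cohorts would be counted against a newer, smaller denominator.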

What it tells you: How much effort users must invest before seeing results

A long time to value increases abandonment and dependence on support.

Time to Value Equation:

Time to Value = Timestamp of activation - Timestamp of signup

Average time to value: 38 days
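The time-to-value formula can be sketched the same way. This version assumes each user record is a dict with hypothetical `segment`, `signup_at`, and `activated_at` keys, and reports the median per segment, since a few very slow users can pull an average far from the typical experience. Comparing segments is also a quick way to run the personalization check on whether time to value varies widely between them.

```python
from datetime import datetime
from statistics import median
from typing import Optional

SECONDS_PER_DAY = 86_400


def time_to_value_days(signup_at: datetime, activated_at: Optional[datetime]) -> Optional[float]:
    """Time to Value = activation timestamp - signup timestamp, in days; None if not activated yet."""
    if activated_at is None:
        return None
    return (activated_at - signup_at).total_seconds() / SECONDS_PER_DAY


def median_ttv_by_segment(users: list[dict]) -> dict[str, float]:
    """Median time to value per segment, skipping users who have not activated."""
    by_segment: dict[str, list[float]] = {}
    for user in users:
        ttv = time_to_value_days(user["signup_at"], user.get("activated_at"))
        if ttv is not None:
            by_segment.setdefault(user["segment"], []).append(ttv)
    return {segment: median(values) for segment, values in by_segment.items()}


users = [
    {"segment": "admin", "signup_at": datetime(2024, 1, 1), "activated_at": datetime(2024, 1, 3)},
    {"segment": "admin", "signup_at": datetime(2024, 1, 1), "activated_at": datetime(2024, 1, 5)},
    {"segment": "admin", "signup_at": datetime(2024, 1, 1), "activated_at": datetime(2024, 1, 31)},
    {"segment": "viewer", "signup_at": datetime(2024, 1, 10), "activated_at": datetime(2024, 1, 11)},
    {"segment": "viewer", "signup_at": datetime(2024, 1, 10), "activated_at": None},
]
print(median_ttv_by_segment(users))  # {'admin': 4.0, 'viewer': 1.0}
```

Users who never activate are excluded from the median, so report the share of non-activated users alongside it; otherwise a segment can look fast simply because its slowest users gave up.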

This is usually tracked as:

What it tells you: Where confidence breaks

Overall completion rates hide where users actually struggle.
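To see where users actually struggle, compare each workflow step with the one before it. The sketch below assumes you can count how many users reached each step, in order; the step names and counts are made up for illustration.

```python
def step_dropoff(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Percent of users lost between each step and the one before it.

    `funnel` lists (step name, users who reached that step) in workflow order.
    """
    drops = []
    for (_, prev_count), (step, count) in zip(funnel, funnel[1:]):
        lost = 0.0 if prev_count == 0 else 100 * (prev_count - count) / prev_count
        drops.append((step, lost))
    return drops


def worst_step(funnel: list[tuple[str, int]]) -> tuple[str, float]:
    """The step where the largest share of remaining users drops off (needs 2+ steps)."""
    return max(step_dropoff(funnel), key=lambda pair: pair[1])


funnel = [("start", 1000), ("connect data", 900), ("configure", 450), ("publish", 400)]
print(f"Overall completion: {100 * funnel[-1][1] / funnel[0][1]:.0f}%")  # Overall completion: 40%
print(worst_step(funnel))  # ('configure', 50.0)
```

In this example, 40% overall completion looks merely mediocre, but half of the remaining users are lost at a single step, which is where progression needs tightening.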

What it tells you: Where users are confused or unsure

Errors are one of the clearest signals that guidance is missing or poorly timed.
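One way to find where errors cluster is to compare each action’s error rate with the overall rate. This is a sketch under assumptions: events arrive as hypothetical `(action, failed)` pairs, and the `min_attempts` and `ratio` thresholds are arbitrary starting points to tune, not recommended values.

```python
from collections import Counter


def error_hotspots(events: list[tuple[str, bool]], min_attempts: int = 20,
                   ratio: float = 2.0) -> list[str]:
    """Actions whose error rate is at least `ratio` times the overall error rate.

    `events` holds one (action name, failed?) pair per attempt.
    """
    attempts = Counter(action for action, _ in events)
    errors = Counter(action for action, failed in events if failed)
    total_errors = sum(errors.values())
    if total_errors == 0:
        return []  # no errors anywhere, so nothing stands out
    overall_rate = total_errors / len(events)
    hotspots = []
    for action, n in attempts.items():
        if n < min_attempts:
            continue  # too few attempts to trust this action's rate
        if errors[action] / n >= ratio * overall_rate:
            hotspots.append(action)
    return sorted(hotspots)


events = (
    [("save draft", i < 2) for i in range(100)]   # 2% errors
    + [("publish", i < 15) for i in range(50)]    # 30% errors
    + [("invite", i < 5) for i in range(10)]      # 50%, but only 10 attempts
)
print(error_hotspots(events))  # ['publish']
```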

What it tells you: Which processes are not self-sufficient

Support demand is a lagging but powerful adoption signal.

What it tells you: Whether help is relevant

Low engagement with help is not always bad, but the same help being skipped again and again is a signal worth acting on.

What it tells you: Whether adoption is durable

Adoption is proven when users succeed again, faster, and with less help.
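Durability can be checked directly against that definition. The sketch below assumes a hypothetical completion log recording how long each successful attempt took and how much help the user opened, and flags users whose second success was faster and needed no more help than the first (a relaxed reading of “less help”, since a user who needed none the first time cannot need less).

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Completion:
    user_id: str
    task: str
    finished_at: datetime
    duration_s: float   # time the attempt took
    help_views: int     # hypothetical: guides or help articles opened during the attempt


def durable_users(completions: list[Completion], task: str) -> set[str]:
    """Users whose second success at `task` was faster and needed no more help than the first."""
    runs_by_user: dict[str, list[Completion]] = {}
    for c in completions:
        if c.task == task:
            runs_by_user.setdefault(c.user_id, []).append(c)

    durable = set()
    for user_id, runs in runs_by_user.items():
        if len(runs) < 2:
            continue  # one success doesn't show durability yet
        first, second = sorted(runs, key=lambda c: c.finished_at)[:2]
        if second.duration_s < first.duration_s and second.help_views <= first.help_views:
            durable.add(user_id)
    return durable


log = [
    Completion("u1", "send-report", datetime(2024, 3, 1), 600, 3),
    Completion("u1", "send-report", datetime(2024, 3, 8), 240, 0),
    Completion("u2", "send-report", datetime(2024, 3, 1), 200, 0),
    Completion("u2", "send-report", datetime(2024, 3, 9), 420, 2),
    Completion("u3", "send-report", datetime(2024, 3, 2), 300, 1),
]
print(durable_users(log, "send-report"))  # {'u1'}
```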

A simple set of diagnostic rules:

| If users… | You should fix… |
|---|---|
| Don’t start | Orientation |
| Start, but don’t finish | Progression |
| Finish incorrectly | Contextual guidance |
| Ignore help | Personalization |
| Redo work | Reinforcement |
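The diagnostic table can also be written down as rules, which is useful if you want dashboards or alerts to name a play rather than just a number. This sketch takes the symptom-to-play mapping straight from the table; the `Symptom` names are hypothetical, and returning plays in row order (earliest breakdown in the journey first) is an assumption, not something the table prescribes.

```python
from enum import Enum


class Symptom(Enum):
    # Defined in the same order as the table rows.
    DONT_START = "don't start"
    START_BUT_DONT_FINISH = "start, but don't finish"
    FINISH_INCORRECTLY = "finish incorrectly"
    IGNORE_HELP = "ignore help"
    REDO_WORK = "redo work"


# Symptom -> adoption play, copied from the diagnostic table.
PLAY_FOR = {
    Symptom.DONT_START: "Orientation",
    Symptom.START_BUT_DONT_FINISH: "Progression",
    Symptom.FINISH_INCORRECTLY: "Contextual guidance",
    Symptom.IGNORE_HELP: "Personalization",
    Symptom.REDO_WORK: "Reinforcement",
}


def plays_to_fix(observed: set[Symptom]) -> list[str]:
    """Plays to work on for the observed symptoms, earliest breakdown first."""
    # Iterating over an Enum yields members in definition order.
    return [PLAY_FOR[s] for s in Symptom if s in observed]


print(plays_to_fix({Symptom.REDO_WORK, Symptom.DONT_START}))  # ['Orientation', 'Reinforcement']
```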

Metrics should lead to decisions. If they do not, they’re decoration.

Most teams don’t realize they’re paying a Training Tax.

The Training Tax shows up when a product or system technically works, but only after someone explains it.

You’ll recognize it when the product is usable, but not self-sufficient.

The Training Tax feels reasonable at first.

Over time, the cost compounds.

The system never earns independence.

In SaaS products, this shows up as stalled expansion and flat retention. In transformation initiatives, it shows up as operational drag that never fully goes away.

You are likely paying the Training Tax if:

These are signals that the product is asking users to carry too much cognitive load.

Teams that reduce the Training Tax shift effort from explanation to enablement. Reinforcement and guidance travel with the user, not just the interface.

They:

Training becomes reinforcement, not a requirement.

That is when adoption starts to scale.

Strong adoption strategies answer three questions clearly.

Practical principles:

Adoption improves when guidance is treated as part of the product experience, not an afterthought.

Adoption isn’t abstract. In practice, it shows up as measurable behavior changes users actually make and teams can act on. Below are real results from Appcues customers that illustrate how adoption patterns play out in different contexts.

A crisp first outcome anchors adoption. When users quickly experience value, everything downstream becomes easier.

After mapping and instrumenting user behavior with no-code tracking, GetResponse identified a dominant path to core value. By designing onboarding to push more users down that path, they saw:

Ideal adoption isn’t about showing features — it’s about guiding users through work they care about with minimal friction.

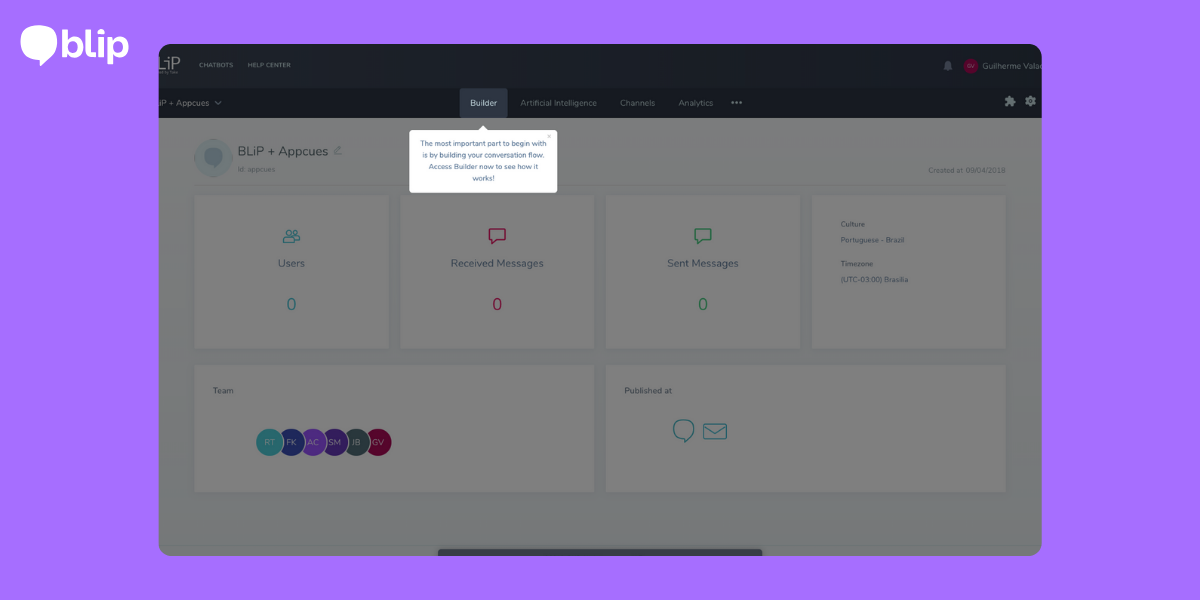

Blip redesigned its onboarding flows so users could complete real tasks instead of learning the UI in the abstract. That focus on workflow performance led to:

Confidence grows when actions clearly lead to outcomes. That reduces hesitation and repeated attempts.

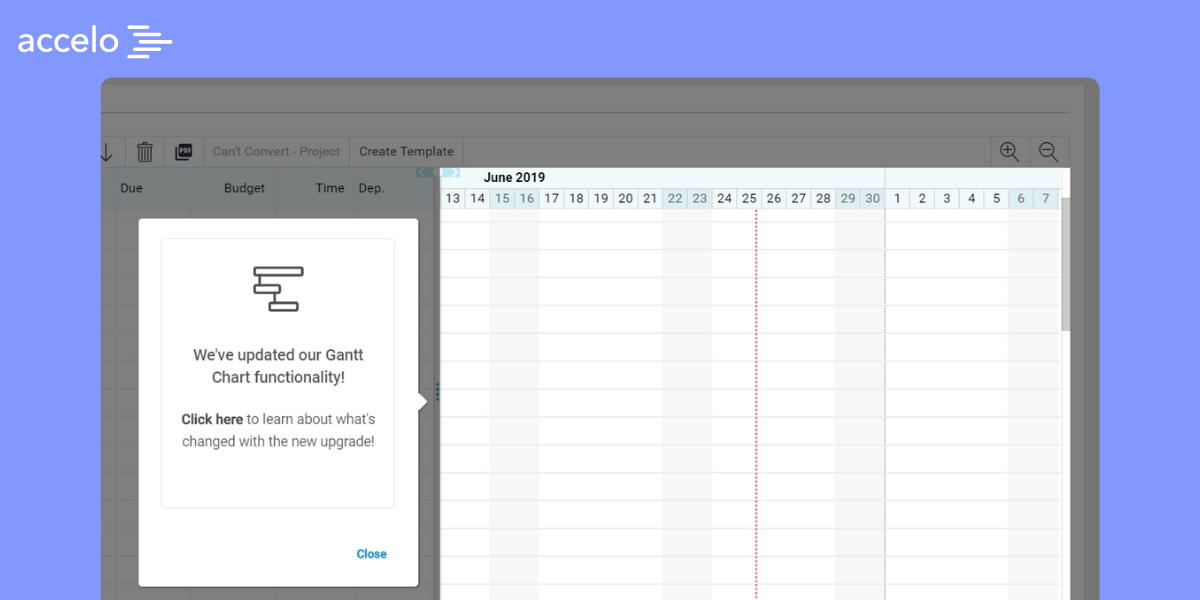

By using in-product help guides to reinforce correct actions, Accelo saw:

Great adoption flows don’t just improve metrics; they move the business needles that matter to both PMs and ops leaders.

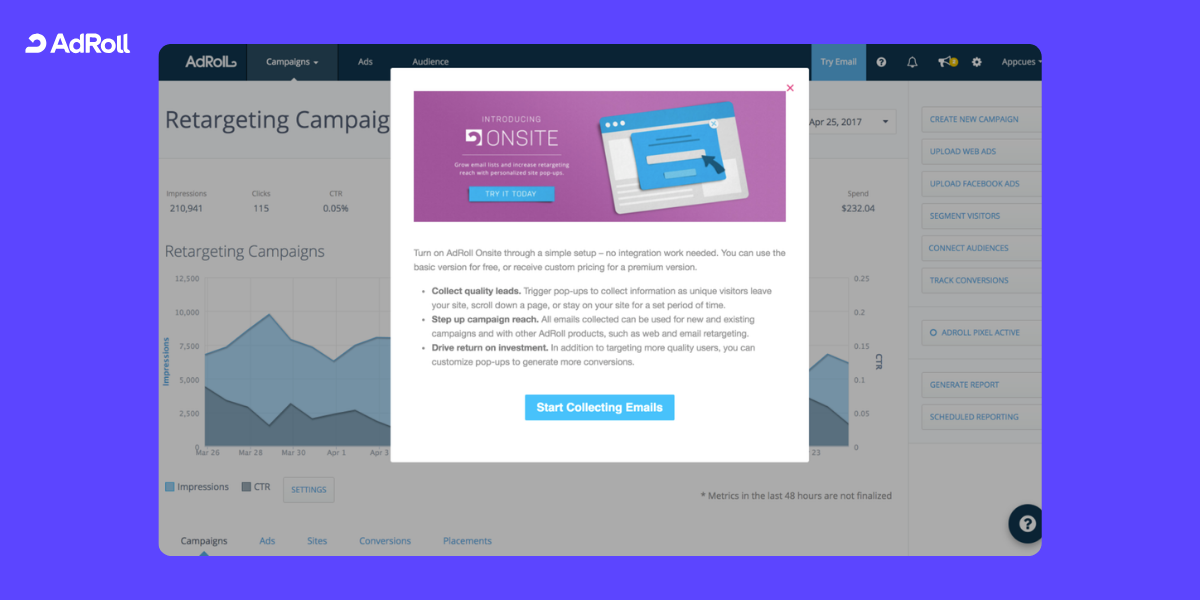

With tailored in-app experiences, AdRoll’s growth team:

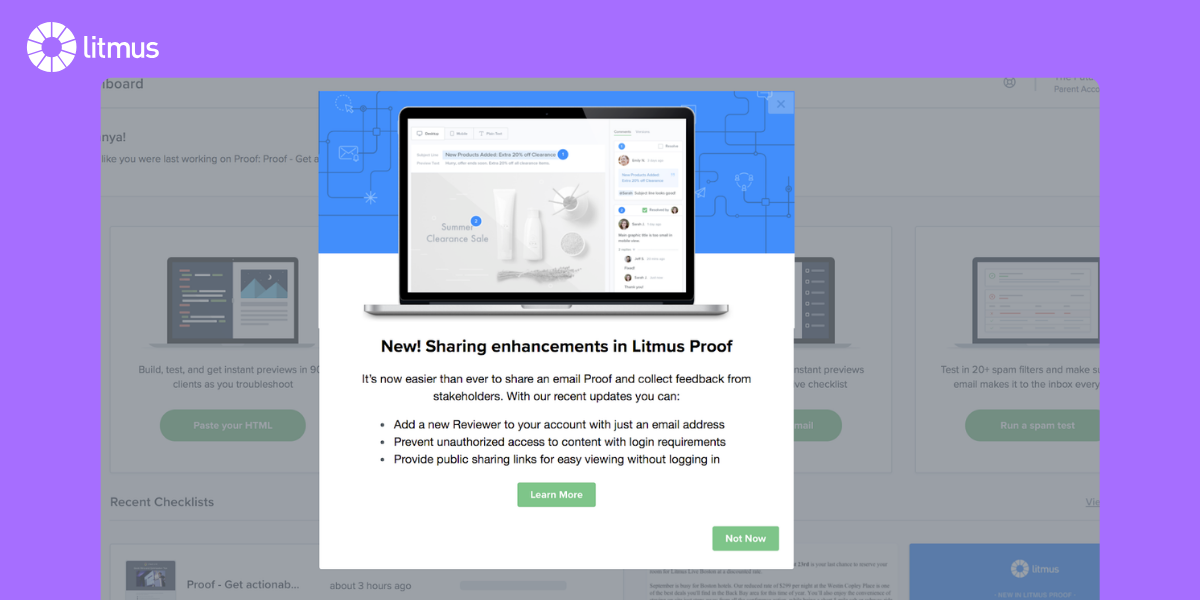

Litmus used targeted in-app messages and tooltips to promote important features. Results included:

Adoption rarely lives in one metric alone. The strongest adoption systems influence several behaviors simultaneously.

Across very different products and user bases, high-performing adoption systems:

These patterns are behavioral design principles that map to real results and business impact.

Ask:

If these answers are unclear, adoption is where to focus next.

If you’ve made it this far, you already know adoption isn’t a nice-to-have. It’s a multiplier: for engagement, retention, task success, and operational efficiency.

Depending on where you are in your adoption journey, here are three next steps crafted to keep momentum going.

If your biggest challenge isn’t awareness but removing friction across experiences, this blog post digs into how teams design adoption systems that don’t slow down engineering:

→ Read: Digital adoption without bottlenecks

This is an ideal next read if you’re asking “How do we operationalize adoption at scale?”

For teams still wrestling with early stages of the user journey, and the delicate balance between activation and onboarding, our comprehensive guide goes deeper on: