Everything teams need to diagnose, build, and sustain engagement that actually lasts

User engagement is one of those terms that feels universally understood. It’s self-explanatory, at least on the surface.

But then someone inevitably asks: “How engaged are our users?”

The answers are rarely satisfying. You look at logins. Sessions. Feature usage. You even get a dashboard that looks busy and important, but doesn’t explain what’s really happening.

This guide exists to slow that moment down and make meaning from noise.

Consider this your re-introduction to user engagement, beyond a vanity metric or a growth buzzword. You’ll re-contextualize a word that can mean anything, and leave with a system you can reason about, design for, and improve with confidence.

We’ve spent more than a decade working alongside product, onboarding, and customer teams who live with user engagement and work through its problems every day. We’ll share what we’ve seen work, what reliably fails, and how teams like yours move forward without guessing.

If you’re trying to understand why users stall, drift, or disengage after a promising start, this guide is for you.


Let’s start by grounding the term.

User engagement is how users are repeatedly getting value from your product.

Not opening it, or clicking around. Real value that relates to their goals.

This is where engagement gets misunderstood.

Teams often default to what’s easiest to measure: sessions, DAU/MAU, feature clicks. Those signals aren’t necessarily useless, but they’re incomplete. They tell you that users did something, not whether what they did mattered.

In short: user engagement is about progress, not presence.

Before we go any further, we need to address a common point of confusion: the difference between user engagement and customer engagement. Two similar terms, two different purposes.

User engagement focuses on what happens inside the product itself. It can be measured through a product health score and directly correlates to how a customer gets value out of the product.

Customer engagement is typically high-touch and relationship-driven, and can be measured via a sentiment score. It’s the measure of how a customer gets value out of the company or the relationship at large.

Here’s a table breakdown of what each category is made up of.

| User engagement | Customer engagement |
|---|---|
| How users learn as they go | Onboarding calls |
| How they discover value on their own | Training sessions |
| How they build habits | QBRs |
| How the product guides them without a human in the loop | Proactive check-ins |
| | One-to-one guidance |

When teams blur these two, engagement gets unclear.

If usage only improves when CS intervenes, you don’t really have a user engagement strategy. What you’ve got is a manual safety net. It can save accounts, but it can’t scale indefinitely.

In reality, both are two sides of the same coin. Strong teams understand both of their functions as unique, and use them to reinforce one another. Together, they make up a more thorough and comprehensive view of customer health.

Engagement matters because it’s one of the clearest leading indicators you have that users who interact with your product or service are experiencing what you designed for them.

Retention tells you what’s already happened, while user engagement is a crystal ball. You learn what’s about to occur, and that gives you time to change course before it drops customer satisfaction and impacts the business.

User advocacy isn’t something teams can manufacture with campaigns alone. It shows up when users consistently reach value, understand why it matters, and feel confident enough in the product to put their name behind it.

Advocacy can take many forms:

What these behaviors have in common is voluntary effort. Users aren’t just using the product; they’re extending their engagement beyond it.

Importantly, advocacy isn’t an early engagement goal. It’s a downstream signal that your engagement strategy is working. When users struggle to reach value or feel uncertain about their progress, advocacy stays quiet. When engagement is strong, advocacy emerges naturally.

For that reason, teams should treat advocacy as validation, not a lever. If you’re pushing for referrals or reviews before users consistently succeed, the underlying engagement loop likely needs attention first.

Most engagement problems don’t come from lazy users or strong feelings. They’re born from constraints: time, data, ownership, and clarity.

Over and over, we’ve seen growth teams face the same problems. Here’s what’s most common:

Teams track what’s easy, not what’s meaningful. Dashboards fill up, but decisions don’t get easier.

Teams chart a course for users around what the product should do. Which means, more often than not, teams fail to consider or incorporate actual user priorities.

Different roles, goals, and maturity levels are flattened into a single experience. Relevance disappears.

If user engagement depends on emails, nudges, or check-ins, it’s brittle. The product isn’t doing enough of the work.

Users interact and learn just enough to get by, then stop expanding. They never reach power user status.

When user engagement drops, the debrief is full of “My guess is…”. Without a shared view of the journey, user behavior is up to interpretation. And that’s not strong enough to build a strategy on.

These problems are common (and fixable), but only if you can recognize which ones you’re dealing with.

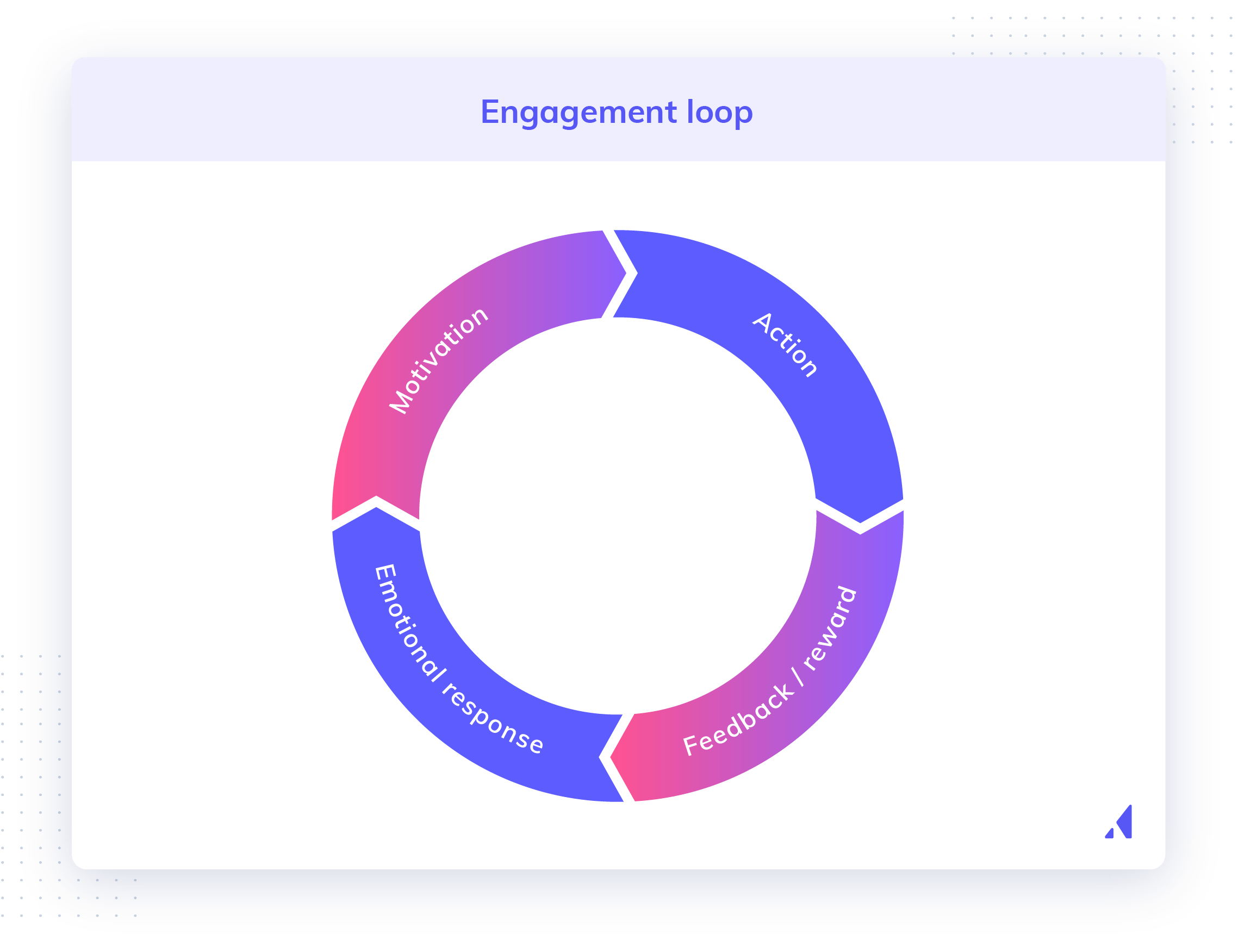

Engagement becomes easier to work on when you go beyond the numbers and treat it as a loop.

When engagement is low, odds are that one of these steps is weak.

Instead of asking “How do we get users to do more?”, ask:

When you ask these questions to analyze user behavior, you flesh out the details. This exercise helps you diagnose your problems, so you have a better understanding of how to improve user engagement.

There’s no single way to boost user engagement.

What we’ve seen, again and again, is that teams who get this right don’t rely on clever nudges or constant messaging. They focus their attention on building a product experience that makes it easier for users to keep going.

Most engagement problems come down to a small number of experience plays, rather than product features or tools.

⚡️ Quickstart: You don’t need all of these at once. In fact, most teams move the needle fastest by starting with Repeat Value and Progress, then layering in the rest only when needed.

Use this play when

Users get value once, then disappear.

What users feel after the play:

“Oh! This is something I should come back to.”

Repeat Value plays help users understand what comes after their first success.

A lot of products are good at getting users to an “aha” moment. Fewer go a step further to show why that's not the end of the story.

This play is about answering one quiet question every user has:

What should I do next and why does it matter?

In practice, this looks like:

If users activate but don’t come back on their own, your repeat value isn’t clear yet. Messaging may drive spikes, but the most reliable indicator is sustained active usage.

After a user succeeds once, the product often goes quiet. The experience feels “done,” so teams try to restart momentum later with emails or reminders.

That works sometimes. But it doesn’t last.

Look closely at what happens right after a user succeeds. If the product doesn’t clearly point to what comes next, users won’t find a reason to return.

If you can’t answer “what’s the next useful thing?” inside the product, engagement will stay unpredictable.

You’ll know it’s working when

Users come back and repeat the same valuable action, without being chased.

Use this play when

Users start strong but slow down, or stop shortly after onboarding.

What users feel after the play:

“Okay, I’m getting somewhere.”

Progress plays help users understand whether their effort is paying off.

They’re especially important when:

This play exists to reduce doubt. It not only keeps users engaged, but answers: am I on the right track?

If users start onboarding but don’t reach deeper usage (or take a long time to get there), that means the signals you've built for progress aren't pointing the way clearly enough.

Progress in this play often looks like a list of steps to complete. Users finish them, but don’t feel more confident or capable afterward. Just productive.

That’s when user engagement drops, right after “completion.”

Progress should help users feel closer to what they’re trying to achieve.

If finishing a step doesn’t make someone feel closer to their goal, it isn’t pulling its weight.

You’ll know it’s working when

Users move from early actions into deeper, more meaningful use without needing a push.

Use this play when

Users abandon or hesitate at the same steps again and again.

What users feel after the play:

“That helped and it showed up at the right time.”

Contextual guidance helps users get unstuck while they’re already working.

Instead of sending people to docs or training, this play supports them right where the problem appears.

It’s especially useful when the product and its key features are inherently complex and can’t be simplified.

When your guidance works, fewer users get stuck in the same places and you’ll see a reduction in issues escalated to human support.

Guidance piles up. Tooltips litter the landscape. Messages line the screen like the Las Vegas strip. Eventually, users stop seeing any of it.

Good guidance is quiet and specific.

It shows up when someone hesitates, helps them take action, and then gets out of the way.

If engaged users are ignoring guidance, there’s probably too much of it or it’s showing up at the wrong time.

You’ll know it’s working when

Those steps get smoother and faster, and users need less human help to get through them.

Use this play when

Users stick to the basics and never explore beyond their habit loop.

What users feel after the play:

“That would’ve helped me earlier.”

Feature discovery helps users grow into more advanced ways of using the product.

Done well, it doesn’t feel like learning new features, but more like finding a shortcut.

Healthy discovery shows up as changes in behavior, not just clicks. If users try features once and revert to basics, revisit your timing or framing.

Features get introduced because they launched, not because the user needs them yet.

New features land best when they solve a problem the user is already feeling.

If a feature doesn’t clearly help with what someone is doing right now, it’s too early.

You’ll know it’s working when

People adopt features and change how they work, not just what they click.

Use this play when

Users go quiet without clearly failing or churning.

What users feel after the play:

“Right, that’s what I was trying to do.”

Re-engagement helps users pick things back up after they drift away.

It works best when it reminds people of progress they have already made, not what they failed to do.

Re-engagement works when users return and keep going. If activity spikes briefly and drops again, the underlying customer experience needs attention.

Re-engagement becomes the main way engagement happens. Usage spikes, then drops, then spikes again.

That’s a sign the product experience isn’t carrying enough of the load.

Re-engagement works when users come back to something clear and useful.

If every return depends on another message, the problem lives upstream.

You’ll know it’s working when

Users return, make progress, and don’t need repeated reminders to keep going.

One of the hardest parts of user engagement is knowing who owns what.

This reference table exists to support alignment, and clarify who leads each play plus how teams typically review and maintain them over time.

| Play | Primary lead | Key partners | Review trigger | Watch for |
|---|---|---|---|---|
| Repeat Value | Product | CS, Customer Marketing | Workflow changes, retention dips | Return usage depends on reminders |
| Progress & Momentum | Product | CS, UX | Activation or drop-off reviews | Steps completed without confidence |
| Contextual Guidance | Product | Support, CS | Repeated friction or support themes | Help becoming background noise |
| Feature Discovery | Product, Product Marketing | Customer Marketing | Feature releases, adoption stalls | Discovery driven by launch timing |
| Re-engagement | Customer Marketing | Product, CS | Inactivity spikes, trial drop-off | Messaging compensating for experience gaps |

Clear ownership helps prevent gaps, but engagement work rarely succeeds when it’s treated as a series of handoffs.

Most engagement problems sit at the intersection of Product, Customer Success, and Customer Marketing. Product sees behavior, CS hears friction, and Marketing reinforces direction. But improvement stalls when each team works from a different diagnosis.

Strong collaboration starts by aligning on what’s breaking in the user experience, not on which team should act.

Before choosing a play or launching changes, teams should agree on:

When those answers are shared, ownership becomes an accelerator instead of a boundary.

A simple way to collaborate without adding a heavy process is to anchor discussions around behavior, not solutions.

When reviewing engagement, ask together:

These questions help teams converge on the same problem, even if they contribute in different ways.

When teams surface several engagement problems at once, prioritization often turns into a debate about urgency or ownership.

Instead of ranking ideas, prioritize problems using these questions:

The goal isn’t to solve everything. It’s to choose the issue that, once addressed, makes the rest easier to reason about.

Collaboration tends to break down when:

These are usually signals that prioritization happened too late or not at all.

If you don’t measure engagement, everything feels like progress.

But is any of it actually helping users?

This is where tracking user engagement comes in. Rather than a metrics scoreboard, it’s a way to understand whether users are moving closer to value or quietly drifting away.

The goal isn’t to track everything, just the few signals that tell you whether engagement plays are doing their job.

Before looking at dashboards, get clear on what engagement means for your product.

Ask:

Those answers should shape your metrics, not the other way around. If a metric doesn’t help answer one of those questions, it’s probably noise.

What it tells you

Are users continuing to interact with the parts of the product that matter after activation?

Engagement rate helps you understand whether users are building habits around value-driving actions, not just showing up once. It’s most useful for spotting shallow usage that doesn’t turn into sustained behavior.

How to define it

Engagement rate only works when it’s tied to a specific, repeatable action that represents ongoing value.

Avoid defining engagement as “logged in” or “opened the app.”

Use engagement rate to:

Common signals:

This is typically reviewed in an engagement or active usage report that tracks how often users repeat a defined value-driving action over time.

Engagement rate tells you where momentum breaks, not why.

Optimizing engagement rate in isolation can be misleading. Pair it with retention and activation to make sure engagement reflects real value, not busywork.

Engagement Rate Equation

Engagement Rate = (Users who performed an engagement action ÷ Total eligible users) × 100

This is typically measured over a fixed window, such as weekly or monthly.
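To make the equation concrete, here is a minimal sketch in Python. The event names, the tuple layout of the event log, and the `engagement_rate` helper are all hypothetical and only there for illustration; swap in whatever your analytics export actually looks like.

```python
from datetime import date

def engagement_rate(events, eligible_users, action, start, end):
    """Percent of eligible users who performed `action` at least once in [start, end]."""
    if not eligible_users:
        return 0.0
    engaged = {
        user
        for user, name, day in events
        if name == action and start <= day <= end and user in eligible_users
    }
    return len(engaged) / len(eligible_users) * 100

# Hypothetical event log: (user_id, event_name, date)
events = [
    ("ana", "report_shared", date(2024, 3, 4)),
    ("ana", "report_shared", date(2024, 3, 6)),  # repeat in the window, counted once
    ("ben", "login", date(2024, 3, 5)),          # presence, not the value action
    ("cy", "report_shared", date(2024, 3, 20)),  # outside the weekly window
]
eligible = {"ana", "ben", "cy", "dee"}

rate = engagement_rate(
    events, eligible, "report_shared", date(2024, 3, 4), date(2024, 3, 10)
)
print(f"{rate:.1f}%")  # 25.0%
```

Note that the login event is ignored on purpose: the rate only moves when users repeat the action you have defined as valuable.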

What it tells you

Are users reaching meaningful value?

Activation is the most important engagement metric, but only if it’s defined correctly.

If activation is low, engagement plays earlier in the journey aren’t doing enough to guide users to the right actions.

If activation is high but retention is low, users may be reaching value once, but not seeing why they should return.

Activation rate is usually pulled from signup-to-activation funnels that show how many new users reach your defined “first value” moment.

Activation Rate Equation:

Activation Rate = (Number of users who reach activation ÷ Total new users) × 100

Average activation rate: 32%

What it tells you

How long it takes users to experience their first win.

Time to value measures the gap between signup and activation. The longer that gap, the more chances there are for confusion, distraction, or drop-off.

Look for friction you can remove, delay, or simplify.

Shortening time to value often has more impact than adding new engagement tactics. If users get value faster, many engagement problems resolve themselves.

Time to value is calculated by comparing signup timestamps with activation events, often surfaced in cohort or lifecycle timing analyses.

Time to Value Equation:

Time to Value = Timestamp of activation - Timestamp of signup

Average time to value: 38 days
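As a rough sketch of that calculation, the Python below subtracts signup timestamps from activation timestamps and summarizes the result. The user IDs and timestamps are made up, and reporting the median rather than the mean is an assumption (it is less sensitive to a few very slow activations), not something the equation requires.

```python
from datetime import datetime
from statistics import median

def time_to_value_days(signups, activations):
    """Days from signup to activation for each user who has activated."""
    return {
        user: (activations[user] - signed_up).total_seconds() / 86400
        for user, signed_up in signups.items()
        if user in activations
    }

# Hypothetical timestamps keyed by user ID
signups = {
    "ana": datetime(2024, 1, 1, 9, 0),
    "ben": datetime(2024, 1, 2, 9, 0),
    "cy": datetime(2024, 1, 3, 9, 0),  # never activated, so excluded below
}
activations = {
    "ana": datetime(2024, 1, 3, 9, 0),   # 2 days after signup
    "ben": datetime(2024, 1, 12, 9, 0),  # 10 days after signup
}

ttv = time_to_value_days(signups, activations)
print(median(ttv.values()))  # 6.0
```

Because users who never activate drop out of this number, it is worth reading alongside activation rate rather than on its own.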

What it tells you

Whether value is repeatable.

This is one of the clearest engagement signals, but often overlooked.

Return usage asks:

• Do users come back on their own?

• Do they repeat the action that mattered?

If users activate but don’t return, focus on Repeat Value plays before adding re-engagement campaigns.

Messaging can encourage a return. Only the product can make it stick.

Return usage is most often reviewed in retention or cohort views that show whether users repeat a core action after their initial success.

Return Usage Rate Equation

Return Usage Rate = (Users who repeat a core action within 7 days ÷ Users who activated) × 100

You can adjust the time window based on your product (daily, weekly, monthly).
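Here is one way the return usage equation might look in Python, under a few stated assumptions: activation dates and core actions are tracked per day, a repeat on the same day as activation does not count as a return, and the `return_usage_rate` helper and sample data are hypothetical.

```python
from datetime import date, timedelta

def return_usage_rate(activated_on, core_actions, window_days=7):
    """Percent of activated users who repeat the core action within the window."""
    if not activated_on:
        return 0.0
    window = timedelta(days=window_days)
    returned = {
        user
        for user, day in core_actions
        if user in activated_on
        and timedelta(0) < day - activated_on[user] <= window
    }
    return len(returned) / len(activated_on) * 100

# Hypothetical data: activation date per user, then later core actions
activated_on = {
    "ana": date(2024, 3, 1),
    "ben": date(2024, 3, 1),
    "cy": date(2024, 3, 2),
    "dee": date(2024, 3, 3),
}
core_actions = [
    ("ana", date(2024, 3, 4)),   # 3 days later: counts
    ("ben", date(2024, 3, 12)),  # 11 days later: outside the window
    ("cy", date(2024, 3, 2)),    # same day as activation: not a return
    ("cy", date(2024, 3, 9)),    # 7 days later: counts
]

rate = return_usage_rate(activated_on, core_actions)
print(f"{rate:.1f}%")  # 50.0%
```

Changing `window_days` is how you would adapt this to a daily or monthly product rhythm.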

What it tells you

Whether users are engaged enough to invest effort back into the product.

Feedback participation is a signal of confidence. Users who consistently reach value are more likely to respond to NPS, surveys, or in-product questions — not because they’re asked, but because they feel their experience is working and worth reacting to.

This metric helps distinguish between passive usage and engaged users who have opinions, expectations, and intent.

This is typically measured over a defined window (e.g., after activation, post-milestone, or quarterly).

Use feedback participation to:

Common signals:

This metric is usually reviewed by comparing survey or NPS responses against recent product usage and progress toward value, rather than looking at response volume alone.

Feedback participation doesn’t measure satisfaction or loyalty on its own. A high response rate without strong engagement often signals friction or confusion, not success. Context matters.

Feedback Participation Rate Equation

Feedback Participation Rate = (Users who submit feedback ÷ Users who were eligible to give feedback) × 100

What it tells you

How deeply users are involved with your product.

Depth can show up as:

• number of meaningful actions completed

• use of advanced or secondary features

• expansion into broader workflows

Shallow usage often points to unclear progress, poor discovery, or features being introduced too early.

Depth improves when users understand why the next step matters — not when they’re shown everything at once.

Engagement depth shows up in feature usage or behavior summaries that reveal how broadly and frequently users interact with meaningful parts of the product.

Engagement Depth Metric Equation

Engagement Depth = Average number of meaningful actions per active user

or

Advanced Feature Adoption = (Users who use feature ÷ Eligible users) × 100
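Both depth equations can be sketched in a few lines of Python. The action names, the choice of which actions count as "meaningful", and the definition of an active user as anyone with at least one logged action are assumptions for this example only.

```python
def engagement_depth(actions, meaningful):
    """Average number of meaningful actions per active user."""
    active_users = {user for user, _ in actions}
    if not active_users:
        return 0.0
    meaningful_count = sum(1 for _, name in actions if name in meaningful)
    return meaningful_count / len(active_users)

def feature_adoption(actions, feature, eligible_users):
    """Percent of eligible users who used `feature` at least once."""
    if not eligible_users:
        return 0.0
    adopters = {
        user for user, name in actions
        if name == feature and user in eligible_users
    }
    return len(adopters) / len(eligible_users) * 100

# Hypothetical action log: (user_id, action_name)
actions = [
    ("ana", "create_report"),
    ("ana", "schedule_report"),
    ("ben", "view_page"),
    ("cy", "create_report"),
    ("cy", "create_report"),
    ("dee", "view_page"),
]
meaningful = {"create_report", "schedule_report"}

depth = engagement_depth(actions, meaningful)
adoption = feature_adoption(actions, "schedule_report", {"ana", "ben", "cy", "dee"})
print(depth)     # 1.0
print(adoption)  # 25.0
```

The average hides the split between users with two meaningful actions and users with none, which is why depth is usually worth segmenting by role or cohort.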

What it tells you

Where engagement breaks down.

Overall engagement numbers can hide important details. Step-level drop-off shows you exactly where users hesitate, abandon, or get stuck.

This metric pairs directly with Contextual Guidance.

When a specific step consistently loses users, that’s a signal, not a failure. It tells you where focused guidance or simplification will have the most impact.

Step-level drop-off is identified in funnel or path analyses that highlight where users abandon a workflow or hesitate between steps.

Step-Level Drop-off Rate Equation:

Step Drop-Off Rate = ((Users who reached step − Users who completed step) ÷ Users who reached step) × 100
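Applied across a whole funnel, the drop-off equation points at the step that deserves attention first. The sketch below assumes you already have reached and completed counts per step; the step names and numbers are invented for illustration.

```python
def step_drop_off(funnel):
    """Drop-off rate (percent) per step, given (step, reached, completed) tuples."""
    rates = {}
    for step, reached, completed in funnel:
        rates[step] = (reached - completed) * 100 / reached if reached else 0.0
    return rates

# Hypothetical funnel counts: (step name, users who reached, users who completed)
funnel = [
    ("create_project", 200, 180),
    ("invite_team", 180, 90),
    ("publish", 90, 81),
]

rates = step_drop_off(funnel)
worst_step = max(rates, key=rates.get)
print(rates)       # {'create_project': 10.0, 'invite_team': 50.0, 'publish': 10.0}
print(worst_step)  # invite_team
```

In this made-up funnel, the middle step loses half of the users who reach it, so that is where focused guidance or simplification would likely pay off first.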

What it tells you

Whether re-engagement leads to sustained use.

The real question isn’t “Did they come back?”

It’s “Did they keep going?”

If re-engagement leads to brief spikes followed by drop-off, the underlying experience needs attention.

Re-engagement works best when it reconnects users to a clear next step — not when it tries to create motivation from scratch.

Re-engagement effectiveness is assessed by combining re-entry activity with follow-on usage, usually by checking whether returning users reach value again after coming back.

Re-engagement Effectiveness Equation

Re-engagement Rate = (Inactive users who return and reach value ÷ Inactive users contacted) × 100

To assess quality, pair it with:

Post-Re-engagement Retention = Users still active after X days ÷ Re-engaged users
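A small Python sketch shows how the two numbers work together. The user IDs, the 30-day check, and both helper names are hypothetical, and retention is expressed as a percentage here to match the other rates in this guide, which is an assumption on top of the equation as written.

```python
def reengagement_rate(contacted, returned_and_reached_value):
    """Percent of contacted inactive users who came back and reached value."""
    if not contacted:
        return 0.0
    return len(returned_and_reached_value & contacted) / len(contacted) * 100

def post_reengagement_retention(reengaged, still_active):
    """Percent of re-engaged users who are still active after the chosen period."""
    if not reengaged:
        return 0.0
    return len(still_active & reengaged) / len(reengaged) * 100

# Hypothetical sets of user IDs
contacted = {"u1", "u2", "u3", "u4", "u5", "u6", "u7", "u8"}
reengaged = {"u1", "u2", "u3", "u4"}  # returned and reached value again
active_after_30_days = {"u1", "u9"}   # u9 was never part of the campaign

rate = reengagement_rate(contacted, reengaged)
retention = post_reengagement_retention(reengaged, active_after_30_days)
print(rate)       # 50.0
print(retention)  # 25.0
```

A 50% return rate looks healthy until the second number shows that only a quarter of those users kept going, which is the "brief spike followed by drop-off" pattern described above.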

Engagement metrics are most useful when they’re tied to specific plays, not tracked in isolation.

| Engagement play | Metrics |
|---|---|
| Repeat Value | Return usage, early retention |
| Progress & Momentum | Activation rate, time to value, early drop-off |
| Contextual Guidance | Step-level drop-off, error rates, support volume |
| Feature Discovery | Feature adoption, depth of usage |
| Re-engagement | Reactivation rate, post-return retention |

If you’re tracking a metric but can’t name the play it supports, it’s probably not actionable yet.

Benchmarks can be helpful, but they’re not the goal.

A “good” engagement rate for one product might be terrible for another. What matters most is whether your metrics are:

Use benchmarks for context and as solid ground to measure your baseline against, and use trends for decisions. Collect customer feedback continuously to understand not just the numbers, but the psychology and feelings that come from your strategy.

A few guardrails teams find helpful:

If a metric sparks discussion and leads to a decision, it’s doing its job. If it only shows up in slides, it probably isn’t.

Engagement metrics only help if they’re reviewed at the right rhythm.

Too often, teams either:

The goal? Early visibility without noise.

Most teams don’t need a complex cadence. Two simple check-ins are usually enough.

Weekly reviews are about answering one question:

Are users getting stuck right now?

This serves as a quick scan for friction, without turning the touch base into a performance review.

Look at:

If something spikes, dips, or repeats, that’s your signal. Pick one issue and move on.

Monthly reviews are where the data starts showing trends you can act on.

This is where you step back and ask:

Are users coming back, going deeper, and sticking around?

Look at:

This is also the right moment to sanity-check your engagement plays:

If a metric:

it probably doesn’t need to be reviewed that often.

Metrics are there to support judgment, not create process.

Good engagement metrics don’t show whether users like your product. They tell you whether users are moving closer to value and choosing to stay there.

When metrics are tied to engagement plays, teams stop guessing and start learning. And that’s when you’ll find confidence replacing anxiety.

Most teams don’t have just one engagement problem. They see low activation, shallow usage, drop-off in key steps, quiet users, and weak advocacy — all at once.

Trying to address all of it simultaneously usually creates noise, not progress.

Instead of asking “Which metric should we fix?” start with a simpler question:

Where does momentum break earliest for most users?

Engagement problems compound. When early momentum is weak, downstream issues are inevitable. Prioritization works best when you focus on restoring the first broken link in the engagement loop.

When you’re facing multiple issues, use this order of operations:

This sequence mirrors how engagement builds in real life: value first, then repetition, then depth, then advocacy.

You’ll know prioritization is working when:

Engagement improves fastest when effort is focused, sequential, and grounded in real user behavior.

Teams rarely struggle with engagement because they are inactive. They struggle because effort accumulates without direction. Messages go out when usage dips. Features get promoted when adoption looks soft. Guidance is added wherever users seem confused.

Each response makes sense in isolation. Together, they rarely form a strategy.

User engagement strategies exist to answer a simpler question: what should we focus on next, and why?

User onboarding usually has a clear activation moment. Engagement needs the same clarity for what comes after.

Before choosing plays or metrics, get specific about what ongoing success looks like in real behavior. What do engaged users who stick around actually do, repeatedly? What actions show up early for loyal customers but not for disengaged ones?

This definition doesn't need to be perfect, but it does need to be shared. Without it, engagement defaults to proxies like clicks, opens, or completions. Those are easy to measure, but they rarely tell you whether users are succeeding.

Once ongoing success is clear, work backward and name where users fall short.

Most teams already know the answers:

These aren't abstract engagement problems. Each is a specific breakdown in momentum. Good user engagement strategies should be built around fixing these moments, not around adding more activity everywhere.

Engagement plays work best when they're applied deliberately and with intention.

| If users… | Then the problem is… |
|---|---|
| Activate once and vanish | Repeat value |
| Start but stall | Progress |
| Abandon specific steps | Friction |
| Plateau | Discovery |
| Drift away quietly | Re-engagement |

Trying to address all of these at once usually creates noise. Teams learn faster when they commit to one play, apply it carefully, and watch how behavior changes.

As a rule, anything that needs to happen for most users should live in the product itself. That includes showing what to do next, helping users recover when they get stuck, and reinforcing progress toward meaningful outcomes.

Out-of-app messaging works best when it invites users back or highlights changes. But it has its downsides: it struggles when asked to explain value or guide core behavior on its own. Using out-of-app messaging for the sake of it, without basing its use on what people are or aren’t doing in your product, creates a gap between what you say and what users actually experience.

If engagement depends heavily on external messaging, that's usually a signal that the product experience needs attention and should be carrying more weight in-app.

Engagement is not something you finish. Like onboarding, it improves through small, focused changes tied to real behavior. Teams try something, observe what engaged users do, and adjust.

Metrics matter here only insofar as they help you see whether users are moving closer to the behavior you defined as success or further away. The goal is not to prove progress, but to learn while a user's engagement momentum, or lack thereof, is still visible.

Strong user engagement strategies make the product clearer, not louder.

It:

For teams that are struggling, this is not a promise of quick wins. It offers something more useful: a way to decide what to work on next, with confidence that the effort is tied to how users actually succeed.

Every team that takes user engagement seriously eventually runs into the same question:

Should we build this ourselves, or should we buy a tool?

This section is here to help you make the decision intentionally, based on what kind of engagement work you need to do right now.

Building user engagement into your product can be the right choice, especially early on.

Teams tend to build when:

In these cases, building can be faster in the short term. Everything lives in one codebase. There’s no extra system to learn or maintain and decisions stay close to the product.

Building works best when control is deeply important. You can design exactly what you want, where you want it, without compromise.

Where it tends to struggle is iteration. As user engagement needs evolve, small changes often require planning, development, review, and deployment. What starts as a simple adjustment can take weeks. Over time, engagement work slows down or gets deprioritized entirely. That affects user satisfaction as well as ongoing work.

Buying usually becomes attractive when engagement stops being a setup problem and starts being a growth problem.

Teams tend to buy when:

At this stage, the challenge isn’t knowing what to build. It’s being able to change it fast enough.

The main advantage of buying is speed. Changes can be made quickly. Experiments are easier to run. Engagement becomes a process teams can actively improve instead of something they ship once and leave alone.

The tradeoff is ownership. Tools come with constraints. Teams need discipline to avoid overusing them. Without a clear strategy, it’s easy to add more user engagement without improving outcomes.

Instead of only asking “Should we build or buy?” try starting with these questions:

If most of those are true, buying usually makes sense.

If engagement needs are simple, stable, and tightly coupled to core logic, building can be the right call.

Neither choice is permanent. Many teams start by building, then buy later when engagement becomes more strategic. Others buy early, then build custom pieces as needed.

What matters is choosing based on your constraints, not on ideology.

| Consideration | Build in-house | Buy a platform |
|---|---|---|
| Control over UI logic | ✅ Full control | ⚠️ Constrained by platform |
| Deep workflow integration | ✅ Native by default | ⚠️ Depends on tooling |
| Speed to first version | ⚠️ Slower | ✅ Faster |
| Iteration speed | ❌ Dev-dependent | ✅ Low code |
| Experimentation | ❌ Costly | ✅ Designed for it |
| Personalization at scale | ⚠️ Complex | ✅ Built-in |
| Measurement & analytics | ⚠️ Custom work | ✅ Included |
| Long-term maintenance | ❌ High | ⚠️ Vendor dependency |
| Early-stage flexibility | ✅ Strong | ⚠️ Can be overkill |
| Mature product scalability | ⚠️ Hard | ✅ Designed for scale |

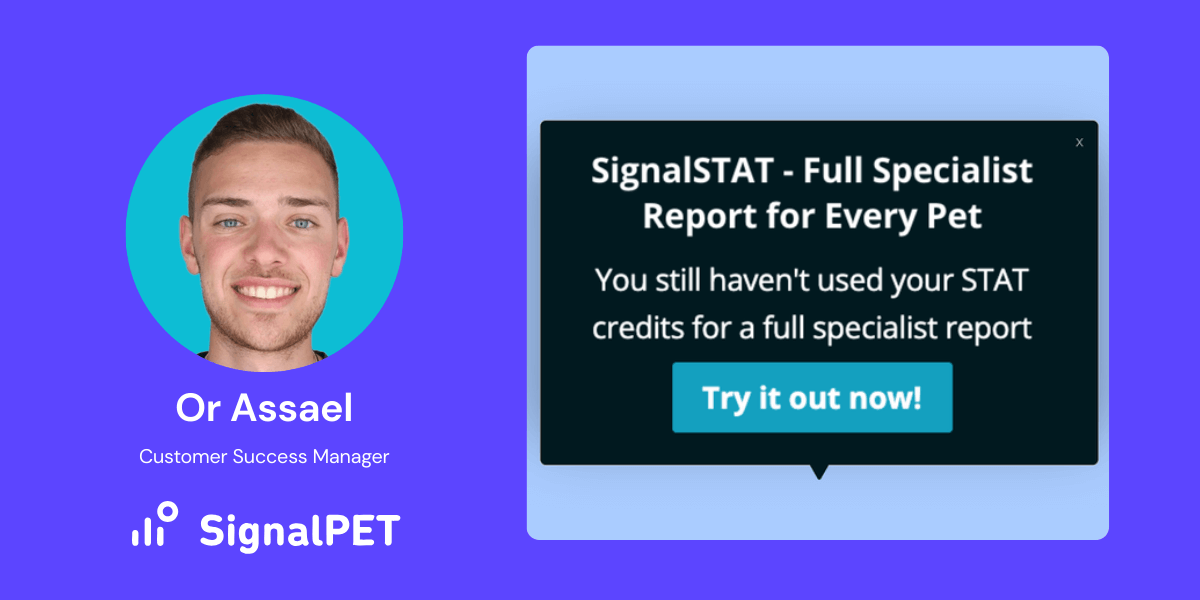

SignalPET helps veterinary teams monitor pets between visits using ongoing health data. Early engagement looked strong, since clinics and pet owners could complete their initial setup and see value fast. The challenge, though, came from sustaining user engagement over time.

Users understood the concept. What was less clear was how their early actions translated into ongoing, repeat value.

After the first successful interaction, the experience didn’t do enough to reinforce why returning mattered. Users had value once, but the product wasn’t consistently showing how that value accumulated over time.

This was a continuity problem.

The team focused on reinforcing repeat value rather than pushing re-engagement.

They:

Instead of asking users to come back, the product showed them why coming back made sense.

Engagement improved because users could see continuity. Each interaction felt connected to the last, and future value felt easier to anticipate.

Users returned because the product made progress visible over time, not from reminders.

Repeat Value plays work when they help users understand that success compounds. If users get value once but don’t come back, the experience likely isn’t doing enough to show how today’s action connects to tomorrow’s outcome.

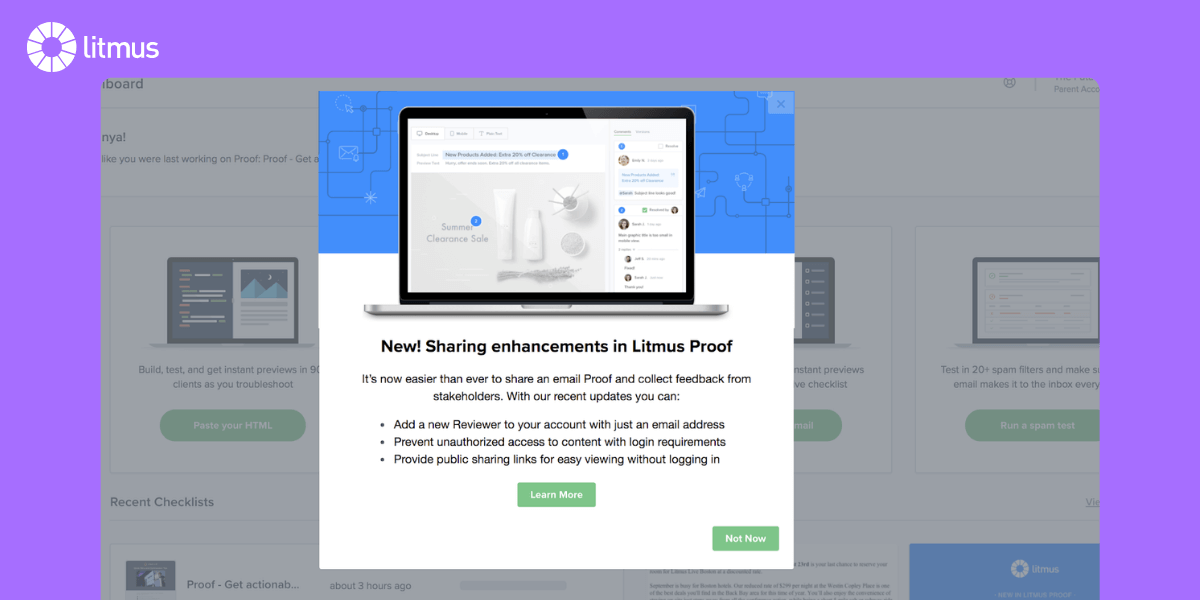

Users were active and comfortable with the basics, but engagement plateaued. Advanced features were rarely adopted, despite the time and effort the product team had invested in them.

Feature discovery followed release timing, not user readiness. In practice, features were visible but easy to ignore, because users didn’t yet understand why they mattered, only that there was suddenly a new thing to learn.

This issue came down to timing.

The team narrowed their focus to discovery to improve user engagement and feature adoption.

They:


Users encountered features only when they had the context to care. That meant discovery felt helpful, not distracting, and active users had a deeper, more meaningful connection to keep them learning.

Feature Discovery works when it follows progress. Showing features too early creates noise, while showing them when they’re relevant creates meaningful user engagement.

Xometry connects buyers with manufacturers, and the core action that matters is placing an order. While users were signing up and exploring, many stalled before completing that step. They had intent, but the experience wasn’t consistently helping them move from consideration to action.

Although users engaged, this revealed an in-product follow-through problem.

Users would move through the product fine until they hit friction and uncertainty at decision points inside the product, specifically at the point where they would become paying customers.

The team made sure key information and reassurance were part of the experience, but they lived outside the moment when users needed them most. As a result, users hesitated, deferred action, or dropped off entirely.

Users already wanted to place orders. But the product wasn’t actively guiding them through the moments that required the confidence they needed to act.

Xometry focused on contextual guidance at high-intent moments.

They:

The messaging was tightly scoped. It showed up only when users reached a decision point and disappeared once the action was taken.

These were engaged users, and they didn't need more reminders or external prompts. What was missing was support in the moment of action. By placing guidance inside the workflow, Xometry helped users move forward without breaking focus or sending them elsewhere for answers.

User engagement improved because the product reduced hesitation at the exact point where users were already leaning in.

Contextual Guidance works when it removes friction where intent already exists. If users want to act but don’t, the most effective engagement often happens inside the workflow, at the moment of decision.

User engagement matters, and if you’ve made it this far, congratulations: you have a full understanding of the landscape you're working in.

But now it's the moment to move from ideas to confidence.

Here are the next steps teams usually take, depending on what they need most right now.

Remove adoption bottlenecks before they turn into engagement problems

Learn how teams scale digital adoption without adding friction or manual work.

→ Read how to scale digital adoption

See how real teams put these engagement plays into practice

Explore how product-led teams use Appcues to improve activation, adoption, and retention.

→ Explore customer stories