Yup, you can A/B test your user onboarding Flows


How often do you hear “it’s worth a test” during your work week? If you work in SaaS, I’d bet at least a dozen times. Makes sense! In an industry built to fail fast and learn faster, testing has become hard-coded into the culture—one that advocates for learning-focused failures as a path to success.

In today’s climate (read: in this economy), where teams are expected to increase output with fewer resources, a solid testing strategy can shed light on which work drives the most impact. It also takes the guesswork out of decision-making, shifting that nagging inner dialogue from “I think” to “I know” (and boy, does that data-driven confidence do wonders for imposter syndrome 🤗).

Want to know whether every change you ship actually produces positive results? Then continuous testing and performance benchmarking are a small price to pay. Do your users (and your bottom line) a favor and pay it.

Of all the different UI-centric testing methodologies, the humble A/B test reigns supreme. It’s by far the best-known test type, but its definition can vary depending on your industry. In its simplest form, an A/B test shows 2 different versions of something to 2 groups of users and then measures which version performs better.

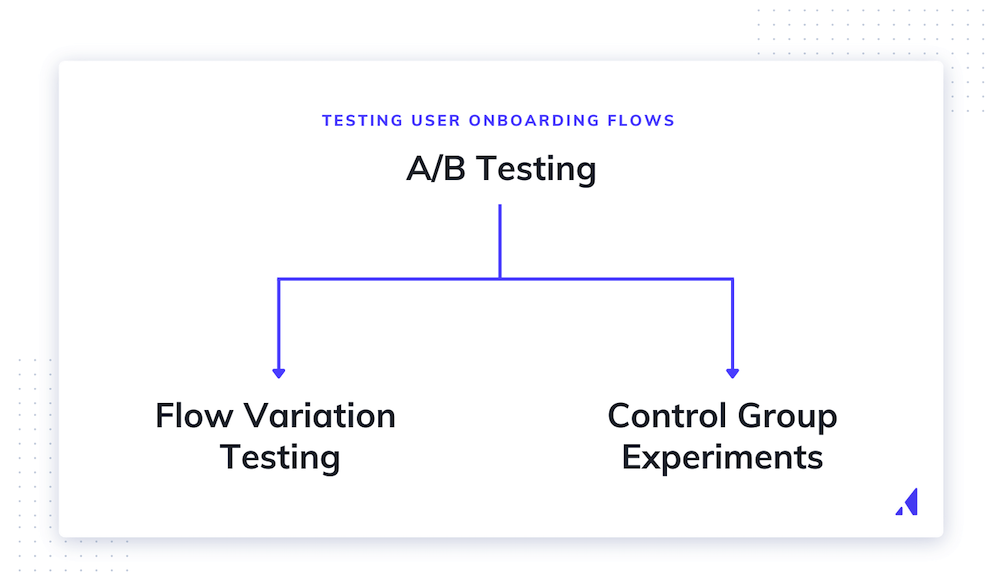
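Stripped down, that measurement is a comparison of one metric between two groups of users. Here’s a minimal Python sketch, assuming “performs better” means a higher conversion rate. The function names and the sample numbers are hypothetical, made up for illustration.

```python
from typing import Optional


def conversion_rate(conversions: int, users: int) -> float:
    """Share of users in a group who completed the target action."""
    if users <= 0:
        raise ValueError("a group needs at least one user")
    return conversions / users


def compare_versions(a: tuple[int, int], b: tuple[int, int]) -> dict:
    """Compare versions A and B, each given as (conversions, users)."""
    rate_a = conversion_rate(*a)
    rate_b = conversion_rate(*b)
    # Relative lift of B over A; undefined (None) when A never converted.
    lift: Optional[float] = (rate_b - rate_a) / rate_a if rate_a else None
    if rate_b > rate_a:
        winner = "B"
    elif rate_a > rate_b:
        winner = "A"
    else:
        winner = "tie"
    return {"rate_a": rate_a, "rate_b": rate_b, "lift": lift, "winner": winner}


# Hypothetical results: 60 of 500 users converted on A, 75 of 500 on B.
print(compare_versions(a=(60, 500), b=(75, 500)))
```

A higher raw rate on its own doesn’t tell you whether the difference is real or just noise, which is why group size and statistical significance matter.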

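Here at Appcues, we usually think about A/B testing in the context of user onboarding. In that sense, A/B testing is an umbrella term covering 2 testing categories: A/B Flow Variation tests, which show different versions of a Flow to different groups of users, and Control Group Experiments, which compare users who see a Flow against a control group that doesn’t.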

Whichever flavor of A/B testing you’re sampling, your goal remains the same: Gain insight into how users engage with your product to test a hypothesis for improvement. Well-crafted A/B tests quantify the impact of UI changes on customer interactions and validate—or invalidate—your hypothesis.

Now, let’s put that science into context by examining an IRL example of Instagram’s latest UI test.

Instagram recently rolled out a version of its app allowing select users to change the way they display images and videos. Adam Mosseri, Head of Instagram, took to social media and explained his testing hypothesis: the future of video and photo is both mobile-first and immersive, so testing full-screen media is a step towards this new reality.

In response to some harsh feedback from a handful of power users (see: Kylie Jenner and Kim Kardashian to name a few) and a change.org petition with over 300,000 signatures to “bring attention to what consumers want from Instagram”, Instagram walked back its UI changes. In an interview with Platformer, Mosseri said:

“I'm glad we took a risk — if we're not failing every once in a while, we're not thinking big enough or bold enough but we definitely need to take a big step back and regroup.”

Of course, redesigns can always frustrate users who are reluctant to change—so it’s best to avoid basing decisions on a vocal minority. But Mosseri said this decision was backed up by Instagram’s internal data—which is exactly why we test, folks. To recap:

We firmly believe that user onboarding is the most important part of the customer journey. Customers won’t stick around if they can’t learn how to use your product quickly, and, as the great Instagram UI debacle of 2022 showed, even power users will drop you if they dislike your new UI.

According to our friends at Mixpanel, SaaS companies that notch a retention rate above 35% after their first eight weeks are classified as the onboarding “elite.” (Sorry, customer acquisition teams, but that means even the elite lose up to 65% of new users in their first 2 months of onboarding, and on average ~70% of your acquisitions are DOA ☠️.)

With tightened budgets, rising churn numbers, and only one shot at making a good first impression, designing an effective onboarding experience can be straight-up intimidating. But fear not! That’s where testing comes in handy.

The cycle of testing, analysis, and optimization deepens your understanding of your user base. With every test, you’ll learn more about your users—arming your team with the valuable insights they need to design for positive growth.

Remember: Pokémon always evolve into better Pokémon. The same goes for your product: every iteration should evolve into a better user experience.

Unfortunately, user onboarding is still one of the most neglected areas of testing. But that also means there’s a lot of opportunity (and upside) for companies willing to put in the work. By continually A/B testing your user onboarding Flows, you can:

Creating an outstanding product experience hinges on prioritizing and shipping the most impactful initiatives your testing uncovers.

Appcues’ no-code platform was purpose-built to create personalized onboarding experiences at scale—without having to wait on a developer. That means it’s never been easier to introduce in-app onboarding content. So you know those smug product management books that wax poetic about rapid, iterative testing? Well, this is the kinda testing-poetry they’re talking about.

But with great (iterative) power comes great responsibility. Before you set up your A/B test, check that your target audience is large enough to detect a meaningful difference with statistical significance. Generally, the larger the group, the better, but best practice is to have at least 500 users in each group. (Pro Tip: there are several online tools to help you check for statistical significance.)
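Many of those significance calculators compare two conversion rates with a pooled two-proportion z-test. Here’s a minimal Python sketch of that test, assuming each user either converts or doesn’t and using a 5% significance level (our assumption, not a number from this post). The 500-user floor mirrors the rule of thumb above; `two_proportion_z_test` and `evaluate` are hypothetical helper names.

```python
import math

MIN_USERS_PER_GROUP = 500  # rule-of-thumb minimum from this post
ALPHA = 0.05               # assumed significance level


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis, both groups share one conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Everyone (or no one) converted in both groups: no detectable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def evaluate(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = ALPHA) -> str:
    """Classify a test as too small, significant, or not significant."""
    if min(n_a, n_b) < MIN_USERS_PER_GROUP:
        return "not enough users yet"
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return "significant" if p_value < alpha else "not significant"


print(evaluate(60, 500, 75, 500))
print(evaluate(100, 1000, 150, 1000))
```

With 500 users per group, 12% vs. 15% conversion is not significant at the 5% level (p ≈ 0.17), while 10% vs. 15% with 1,000 users per group is. That’s a good reminder that a visible gap between variations isn’t proof on its own.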

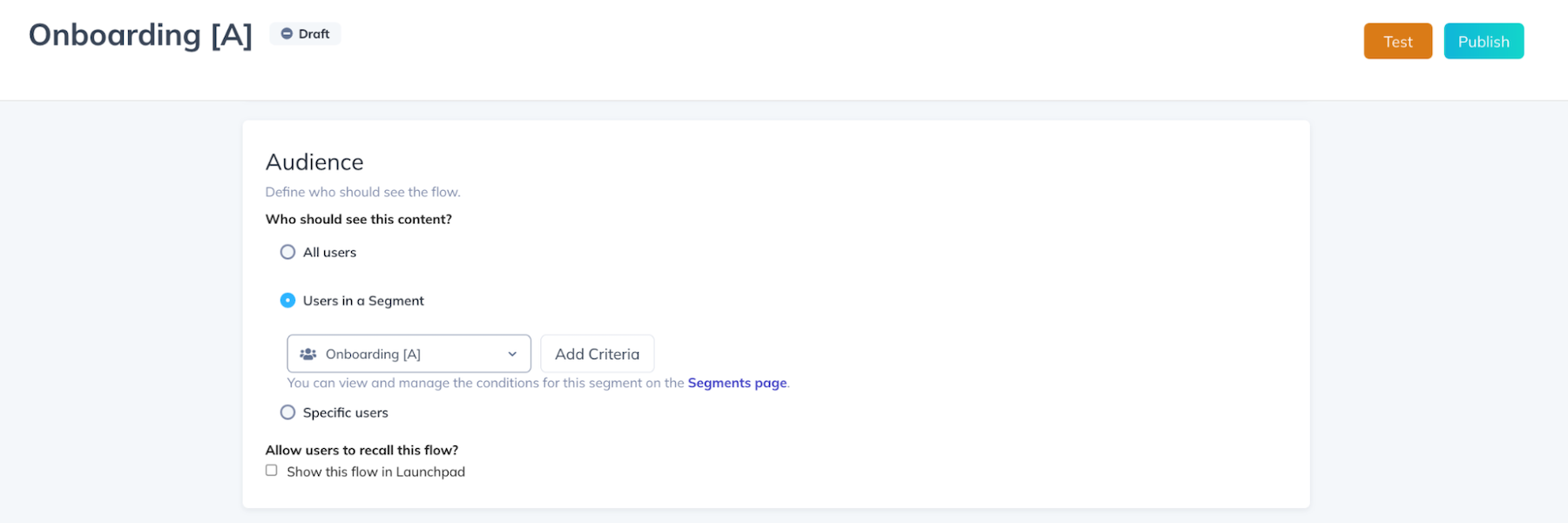

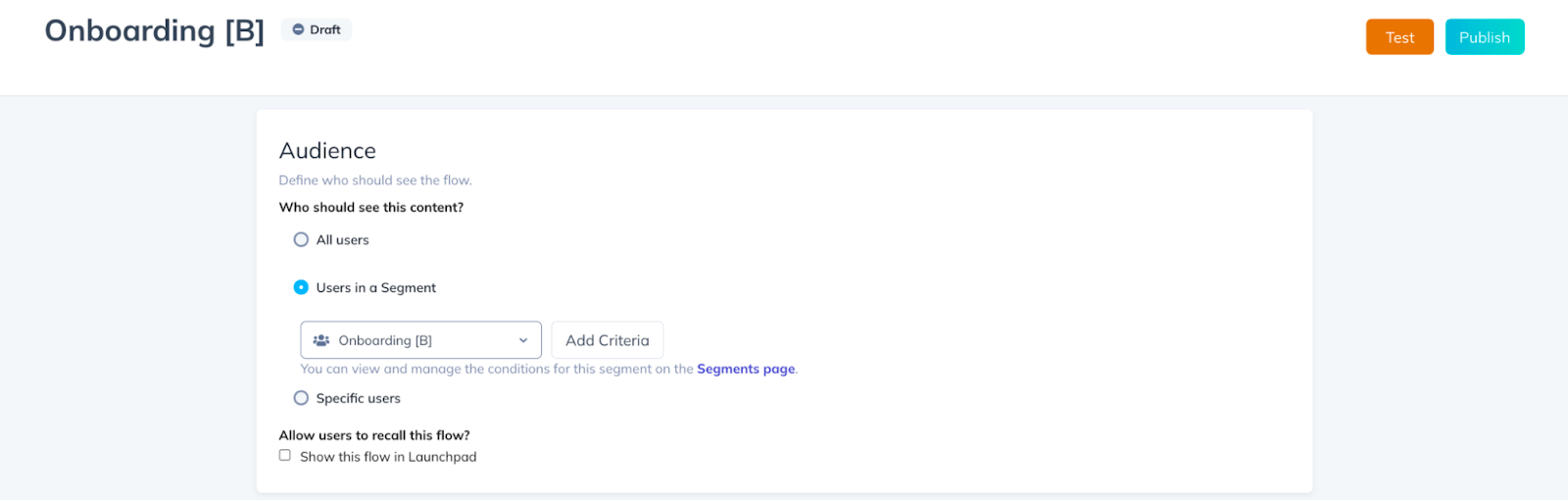

Here’s a sneak peek at how we’ve been using A/B Flow Variation testing internally to test 2 different versions of our trial welcome Flow:

1. We created 2 Segments, Onboarding A and Onboarding B, to use alongside our trial Segment. Each one uses our “Audience Randomizer” auto-property to define the percentage of users included in that Segment.

2. Then, we created 2 versions of our trial welcome Flow and assigned one to Segment: Onboarding A and the other to Segment: Onboarding B.

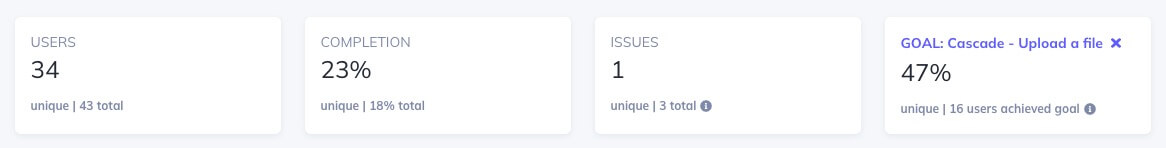

3. To monitor which Flow has the most impact, we compare the Flow conversion rate and Goal completion % for each Segment.

Ta-da! 🥳 A quick and easy way to compare Flow variations to see which one converts.
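Appcues handles all of this with Segments and the Audience Randomizer, no code required. If you’re curious what a percentage-based randomizer and a per-Segment comparison boil down to, though, here’s a hypothetical Python sketch. It is not how Appcues implements its Audience Randomizer: `randomizer_bucket`, `TrialUser`, and `segment_metrics` are made-up names, and the sketch assumes everyone in a Segment is shown that Segment’s Flow, so Flow conversion rate is simply completions divided by Segment size.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


def randomizer_bucket(user_id: str, salt: str = "trial-welcome") -> int:
    """Map a user ID to a stable bucket from 0 to 99 (hypothetical helper)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def assign_segment(user_id: str, percent_in_a: int = 50) -> str:
    """Buckets below percent_in_a go to Onboarding A, the rest to Onboarding B."""
    return "Onboarding A" if randomizer_bucket(user_id) < percent_in_a else "Onboarding B"


@dataclass
class TrialUser:
    user_id: str
    completed_flow: bool  # finished the trial welcome Flow shown to their Segment
    reached_goal: bool    # completed the Goal being tracked


def segment_metrics(users: list[TrialUser]) -> dict[str, dict[str, float]]:
    """Flow conversion rate and Goal completion % for each Segment."""
    totals = defaultdict(lambda: {"users": 0, "completed": 0, "goals": 0})
    for user in users:
        t = totals[assign_segment(user.user_id)]
        t["users"] += 1
        t["completed"] += int(user.completed_flow)
        t["goals"] += int(user.reached_goal)
    return {
        segment: {
            "flow_conversion_rate": t["completed"] / t["users"],
            "goal_completion_pct": 100 * t["goals"] / t["users"],
        }
        for segment, t in sorted(totals.items())
    }


# Hypothetical trial users with made-up outcomes.
users = [TrialUser(f"user-{i}", i % 3 == 0, i % 5 == 0) for i in range(1000)]
for segment, metrics in segment_metrics(users).items():
    print(segment, metrics)
```

Hashing the user ID instead of picking at random on every visit means a returning user always lands in the same Segment, so nobody sees both versions of the welcome Flow.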

When you’re dealing with 2 variations, you might think it’s easy to set that up manually (and you’re probably right!). But once you start adding on more Flow variations, that’s where Appcues really shines. Having more granularity and not being confined to a 50/50 split test helps our customers zero in on the small changes that can drive big impact.

So if you’re itching to run an A/B/C/D test, you can split your Flows however you’d like:

Just set up 4 Flows and 4 audience Segments, and use our Audience Randomizer auto-property to define the percentage of users to include in each Segment. (Don’t worry: we’ve got a step-by-step guide showing you exactly how to do it in our lovely support center.)
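Conceptually, an uneven split like this amounts to carving a range of buckets into slices sized by each Segment’s percentage. Here’s a hypothetical Python sketch of that idea. It isn’t Appcues’ implementation; `bucket`, `make_splitter`, and the 40/20/20/20 split are made up for illustration.

```python
import hashlib
from bisect import bisect_right
from collections import Counter
from itertools import accumulate
from typing import Callable


def bucket(user_id: str, salt: str = "onboarding-abcd") -> int:
    """Stable bucket from 0 to 99 for a user (hypothetical helper)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def make_splitter(percentages: dict[str, int]) -> Callable[[str], str]:
    """Return a function that assigns users to Segments by percentage."""
    if any(p < 0 for p in percentages.values()) or sum(percentages.values()) != 100:
        raise ValueError("percentages must be non-negative and add up to 100")
    names = list(percentages)
    # Cumulative upper bounds, e.g. 40/20/20/20 -> [40, 60, 80, 100].
    bounds = list(accumulate(percentages[name] for name in names))

    def assign(user_id: str) -> str:
        # Index of the first Segment whose upper bound exceeds the user's bucket.
        return names[bisect_right(bounds, bucket(user_id))]

    return assign


# Hypothetical uneven split: keep 40% on the current Flow, test 3 challengers.
assign = make_splitter({
    "Onboarding A": 40,
    "Onboarding B": 20,
    "Onboarding C": 20,
    "Onboarding D": 20,
})
print(Counter(assign(f"user-{i}") for i in range(10_000)))
```

Using a different salt for each experiment keeps one test’s assignments from lining up with another’s, so the same users don’t always end up in “Segment A” across every test.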

Appcues currently supports A/B Flow Variation testing (but hang tight—Control Group Experiment tests are coming soon!)

Ready to put this newfound knowledge to the test? Don’t let that fear of failure become your competitor’s strategic advantage. Experiment often. Learn quickly. And, just keep swimming.

You got this!